Thank you for what I'm certain is a vast oversimplification; I'm not well educated on AI as a whole. I'm not against AI as a whole: that would be small-minded. But I'm hesitant to be excited either; I'll carry on with all the human emotions that are appropriate when dealing with something new, and hold my opinions until her new Voicebank comes out. The only comment I have is regarding the ability to sound more "human" that you mentioned: I am fond of the somewhat robotic sound of Vocaloids and Utaus. But as long as that ability is preserved I see little reason to complain.

I'll do my best to explain!

As context for anyone who doesn't know, traditional vocal synths are made using the 'concatenative' method. A singer is hired to sing all the individual sounds of a language, called phonemes, at various pitches. These short recordings of vowels and consonants are then installed on your computer when you buy a concatenative Vocaloid. Then, the Vocaloid software puts the phoneme recordings together to make the words you type into the program. It is a simple method that lets the program say any word without anyone needing to record every word in the dictionary.
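To make the "look up recordings and stitch them together" idea concrete, here is a toy sketch. It is not how the Vocaloid engine is actually implemented: the "recordings" are plain sine tones standing in for a singer's clips, and every name in it (`VOICEBANK`, `crossfade_join`, `synthesize`) is made up for illustration.

```python
import math

SAMPLE_RATE = 8000  # samples per second; low on purpose to keep the toy small


def fake_recording(freq_hz, seconds=0.1):
    """Stand-in for one recorded phoneme: a plain sine tone (hypothetical data)."""
    n = round(SAMPLE_RATE * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


# The "voicebank": one stored clip per phoneme. A real bank holds a singer's
# recordings at several pitches; these tones are placeholders.
VOICEBANK = {
    "k": fake_recording(300),
    "a": fake_recording(220),
    "t": fake_recording(400),
}


def crossfade_join(left, right, overlap):
    """Join two clips, blending the last `overlap` samples of `left` into `right`."""
    if overlap == 0:
        return left + right
    blended = [
        left[len(left) - overlap + i] * (1 - i / overlap) + right[i] * (i / overlap)
        for i in range(overlap)
    ]
    return left[:-overlap] + blended + right[overlap:]


def synthesize(phonemes, overlap=80):
    """Look up each phoneme's clip and stitch the clips together in order."""
    output = VOICEBANK[phonemes[0]]
    for p in phonemes[1:]:
        output = crossfade_join(output, VOICEBANK[p], overlap)
    return output


cat = synthesize(["k", "a", "t"])
print(len(cat) / SAMPLE_RATE, "seconds of audio")
```

The crossfade is the "hopefully they gel together" part: the quality of a concatenative voice depends a lot on how well neighbouring clips blend at the joins.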

AI vocal synths use machine learning to create the voicebank. Instead of recording phonemes, the singer sings whole songs. Then you label where each phoneme is in each song, so the software knows how to read them. After labelling, the AI software learns the sonic qualities that give the singer their unique timbre and pronunciation. These rules are saved into a much smaller file, then applied dynamically by the software, making the output sound like the singer. Rather than simply playing a pre-recorded sound, the software calculates how a word should be sung by applying the rules it has learnt from 'listening' to the singer, rather like a robotic impressionist. There's a lot more complex stuff happening under the hood. For example, you can feed the AI data from multiple different singers to increase the range of one voice, and make them sing fluently in multiple languages by mixing the 'rules' for English with the 'rules' for a non-native singer's timbre.

However, AI does not sacrifice your ability to make a voice sound unique. In fact, I believe AI voices are easier to edit and can achieve greater variety in performance compared to concatenative voices, because you're not restricted to the singer's recordings. If you push their settings, you can make one AI voice sound like multiple completely different people! The reason AI usage online can be samey is a by-product of convenience. AI voices sound natural out of the box, so producers feel less of a need to edit the results. Consequently, a lot of producers use AI voices at their default settings.

Most concatenative voicebanks sound a lot less polished by default, and require more hard work to sound natural. When you tune a concatenative voice, you can hear a big improvement in its results, which motivates producers to edit them by hand. This forces more users to develop their own unique style.

VOCALOID Hatsune Miku VOCALOID6

- Thread starter MagicalMiku


I've found from playing around with GUMI V6 that I get better results by putting in a phrase, selecting all of the notes, and then going to the inspector and dragging down all of the pitch drift and vibrato settings until she sounds like a robot (like a concatenative bank with pitch snap on), then slowly reintroducing those parameters until it sounds natural. Otherwise V6 draws wild pitch curves and adds weird vibrato, so GUMI ends up sounding very "strained" and thin.
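The "flatten everything, then bring it back slowly" workflow can be pictured with a toy pitch curve. This is only a sketch: the parameter names (`vibrato_depth`, `drift_depth`, `amount`) and the sine-based shapes are invented for illustration and are not the V6 editor's actual settings or maths.

```python
import math


def pitch_curve(note, seconds, vibrato_depth, drift_depth, amount, steps_per_second=100):
    """Return pitch over time in semitones (MIDI-style note numbers).

    `amount` scales both expressive terms: 0.0 gives a flat "robotic" line,
    1.0 gives the full automatic curve. All names here are hypothetical.
    """
    curve = []
    for i in range(round(seconds * steps_per_second)):
        t = i / steps_per_second
        vibrato = vibrato_depth * math.sin(2 * math.pi * 5.5 * t)  # ~5.5 Hz wobble
        drift = drift_depth * math.sin(2 * math.pi * 0.7 * t)      # slow wander
        curve.append(note + amount * (vibrato + drift))
    return curve


def wobble(curve, note):
    """Largest deviation from the written note, in semitones."""
    return max(abs(p - note) for p in curve)


# Step 1: flatten everything; step 2: bring expression back in small steps.
for amount in (0.0, 0.25, 0.5, 1.0):
    curve = pitch_curve(note=69, seconds=1.0, vibrato_depth=0.6, drift_depth=0.4, amount=amount)
    print(f"amount={amount:.2f}  max deviation={wobble(curve, 69):.2f} semitones")
```

Starting from `amount=0.0` and raising it by ear mirrors the approach above: you only add back as much movement as the phrase can take before it starts sounding strained.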

To expand on how the machine learning works (I work in software engineering, currently adjacent to some ML applications...not training models, but setting up infrastructure for some small ones): it's basically just a bundle of statistical math. Instead of storing hundreds of short recordings, you're basically producing a distilled essence of what each sound the voice bank can produce "looks sort of like." e.g. a "ka" sound has one kind of waveform overall, and when it runs into a "t" sound it looks kind of like such and such. The "training" process is basically just crunching a lot of numbers until you have a glorified spreadsheet that you can plug a desired word into and get a mathematical curve out (and that's all a sound is: a compound sine wave traveling through air).

If you record someone saying "cat" several times, the results will all look very similar, and are hypothetically predictable mathematically. It's very hard to do that by hand, but there are now computing tools that can automate that, basically.

So a V2-V4 voice bank is: the user typed "cat" so look up a sound file for "ka" and one for "t" and stitch them together, and hopefully they will gel together instead of sounding bad.

An "AI" voicebank is: the user typed "cat" so let's input those into a statistical pipeline that will predict what the waveform those make will look like.
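Here is a deliberately tiny sketch of the "statistical pipeline" side of that contrast. Real AI voicebanks use neural networks trained on far richer features; this toy just averages some invented measurements per phoneme and then computes a waveform from those averages, to show that nothing recorded is played back. The data and the function names (`train`, `predict_waveform`) are assumptions made up for the example.

```python
import math
from collections import defaultdict
from statistics import mean

# Labelled "training data": (phoneme, measured frequency in Hz) pairs, as if
# someone had marked where each sound sits in several takes of "cat".
# The numbers are invented for illustration.
LABELLED_TAKES = [
    ("k", 298.0), ("a", 221.0), ("t", 405.0),
    ("k", 303.0), ("a", 218.0), ("t", 398.0),
    ("k", 299.0), ("a", 221.0), ("t", 397.0),
]


def train(examples):
    """Boil the examples down to one number per phoneme (the 'spreadsheet')."""
    grouped = defaultdict(list)
    for phoneme, freq in examples:
        grouped[phoneme].append(freq)
    return {phoneme: mean(freqs) for phoneme, freqs in grouped.items()}


def predict_waveform(model, phonemes, sample_rate=8000, samples_per_phoneme=400):
    """Compute a waveform from the learned numbers; no recording is played back."""
    wave = []
    for phoneme in phonemes:
        freq = model[phoneme]
        wave.extend(
            math.sin(2 * math.pi * freq * i / sample_rate)
            for i in range(samples_per_phoneme)
        )
    return wave


model = train(LABELLED_TAKES)
print(model)
print(len(predict_waveform(model, ["k", "a", "t"])), "samples generated")
```

Note that the "model" here is three numbers, while the training data was nine measurements: that is the same reason an AI voicebank file can be much smaller than the recordings it was trained on.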

I probably should have excluded V6 from the "AI sounds natural by default" statement haha.

In Episode 2, "Music x Technology", of the Crypton 30th Anniversary special interview (which I translated here):

vocaverse.network

VOCALOID - Crypton Future Media 30th Anniversary: special interview

Crypton Future Media 30th Anniversary: special interview (translated from the original interview in Japanese, available here:) https://www.wantedly.com/companies/crypton/post_articles/992716 Today, July 21st, 2025, marks the 30th anniversary of Crypton Future Media's founding! To commemorate...

there is a detailed explanation about Miku Vocaloid 6, what they are aiming for, and how development is going together with YAMAHA.

For example:

--By the way, I'm sure there are people reading this who are wondering, so I'll ask you... How is the progress of "Hatsune Miku V6 AI" going?

Sasaki : Regarding the "challenges that are currently difficult to overcome as we proceed with development," which we reported on the SONICWIRE blog in December 2024, we are now seeing a clue to solving them with the cooperation of YAMAHA. We are proceeding with development so that we can make it possible for you to try the new features in some form by 2025, so we apologize to everyone who is looking forward to it, but we would appreciate it if you could wait for a little while longer.

Also, President Ito of Crypton Future Media confirmed a nice thing:

Ito : When it comes to new initiatives such as "NT" and "V6 AI," "Hatsune Miku" always takes the lead, but of course we are also thinking about other virtual singers. It's taking time, but we hope you will continue to watch over the developments going forward.

I recommend reading the full Episode 2 of the interview; it has so many interesting details and covers many topics.

- As mentioned earlier, there are more and more situations where AI technology is being used these days, such as "Hatsune Miku V6 AI". Some creators are uneasy about AI, for example, because they are worried that generative AI may learn their work without their permission. Could you take this opportunity to tell us what your company thinks about AI?

Ito : Because the image of generative AI is prevalent, it is often seen as the enemy of creators due to problems such as unauthorized learning, but AI is not just generative AI. For example, if you combine AI with music understanding technology, it will be possible to have AI find music that perfectly suits your tastes. If we can provide a music discovery service using such AI technology, it will not only be convenient for listeners, but it will also create opportunities for creators to listen to their music.

I think of AI as a new pen tool, and I think it is a technology that can expand the possibilities of music depending on how it is used.

Sasaki : I think that the pen tool is an analogy of an everyday tool used by craftsmen and students alike, and I think it is a good expression. Although the product name does not have "AI" in it, the singing voice synthesis software "Hatsune Miku NT (Ver.2)" that we updated in March this year actually incorporates AI. I think many people have the image that "AI-based singing synthesis software = software that can make a singer sound just like a human", but we want to value "singing voices that retain the characteristics of a virtual singer" that are different from humans, so rather than using AI to reproduce realistic singing voices, we incorporate AI as a tool to make creators' work more efficient.


Crypton Sonicwire booth at Magical Mirai 2025 in Tokyo, where you can buy Crypton products and try Miku NT ver.2, and..and..!! New product announcement confirmed for August 31st!!

(the talking session will be very interesting!)


SONICWIRE will also be appearing on the special exhibition stage!

It seems something will be announced at the "Virtual Singer-related Stage" on August 31st...?

Virtual Singer Product Stage

Date and Time: Sunday, August 31, 2025, 11:00-11:45

Stage Introduction: We will look back on the Virtual Singer products that Crypton Future Media has released so far and talk about future developments.

-Hiroyuki Ito (CEO, Crypton Future Media, Inc.)

-Wataru Sasaki (Crypton Future Media, Inc.)

-Masamichi Koizumi (Crypton Future Media, Inc.)


For the other US people, tonight at:

7PM Pacific

8PM Mountain

9PM Central

10PM Eastern

For UK, August 31:

3AM BST

Hopefully we'll see some good news posted online soon after!
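If anyone wants to double-check those conversions, here is a small sketch using only Python's standard library. It assumes the listed 11:00 stage start is Japan time (the event is in Tokyo) and uses fixed summer-time offsets, which only hold for a late-August date; the `ZONES` table and `local_times` helper are names made up for this example.

```python
from datetime import datetime, timedelta, timezone

# Stage start as listed: Sunday, August 31, 2025, 11:00, assumed to be Japan time (UTC+9).
JST = timezone(timedelta(hours=9), "JST")
stage_start = datetime(2025, 8, 31, 11, 0, tzinfo=JST)

# Fixed summer-time offsets; an assumption that holds in late August only.
ZONES = {
    "Pacific": timezone(timedelta(hours=-7), "PDT"),
    "Mountain": timezone(timedelta(hours=-6), "MDT"),
    "Central": timezone(timedelta(hours=-5), "CDT"),
    "Eastern": timezone(timedelta(hours=-4), "EDT"),
    "UK": timezone(timedelta(hours=1), "BST"),
}


def local_times(start):
    """Convert one timezone-aware start time into each zone in ZONES."""
    return {name: start.astimezone(tz) for name, tz in ZONES.items()}


for name, local in local_times(stage_start).items():
    print(f"{name:8} {local:%a %b %d, %I:%M %p %Z}")
```

For dates where daylight saving might differ, `zoneinfo.ZoneInfo("America/Los_Angeles")` and friends would be the safer choice than fixed offsets.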


Miku V6 first half of 2026 release date set, voice library will be Japanese and English!

(Chinese version in the works too!)

- Starter package will include Vocaloid 6 Editor with some exclusive features made for Miku V6

- first live demo very impressive! Reminds me of V4X expressions with the cuteness of Miku V2, wow..<3

- Miku NT ver.2 and Miku V6 can be used together to achieve what all the producers wanted. There is a very good balance of features between NT ver.2 and V6: the features are different, and yet they can sound so nice together.

- Second live demo is the same song as the first, but without the melody in the background, so it was nice to hear Miku's voice. Such a soft and sweet voice <3

- Vocaloid 6 Editor Lite available on Sonicwire from today! More details in an official Sonicwire blog post to be published later.

- Crypton Future Media and Yamaha are working hard for Miku V6, and in the coming weeks there will be more details! (official artwork and product page will be unveiled once the release date has been decided)

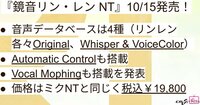

- Kagamine Rin/Len NT!

- Still in Beta version, but features like Automatic Control already working well. Release date set for October 15th, product pages will be live soon with all the details.


- Package and download versions will be available. Artist iXima in charge of Rin and Len NT designs:

Kagamine Rin/Len NT main features, release date and retail price:

- Miku NT ver.2 is the base to develop new features for all Piapro characters, and as they said last year, those empty boxes in the background mean something..

- Miku V6 will be based on V3 and V4X voicebank, with the voice cuteness of Miku V2 <3

- Thanks so much for all the support from the fans and the creators!


Interesting. The Miku song they played a few times has the classic vocaloid "whirrp" sound. (I always liken it to a synthesizer amp envelope opening/closing just a little too slowly.) Sounds great overall.

I can't believe no one has just put a timestamp?

With BGM:

Acapella:


updated the first post with the latest information announced during the talking session:

- Miku V6 will be released in the first half of 2026, Crypton Future Media and Yamaha confirmed during the talking session at Magical Mirai in Tokyo. They also confirmed that Miku V6 is based on V3 and V4X voicebanks, with the cuteness of Miku V2 voice. Two demos (very impressive demos) were played live on the Vocaloid 6 Editor, which will have new features made for Miku V6. Languages supported are Japanese and English (Chinese confirmed in development).

I hope they can make her English clearer! I think English Miku usage would pop off if she was still robotic and accented, but nonetheless easy to understand without subtitles.

I was hoping they'd leave the English to crosslingual synthesis this time... I really wanted to hear what she'd sound like if she was the same as in Japanese.

I agree! Miku English V3 and V4's phonemes can sound strange sometimes when certain combos are used, which massively lets down both the quality and the clarity of the banks. (It's hard to explain, but I've noticed Miku English has a bad tendency to either over- or underenunciate words if you don't edit them, which can throw native English speakers like me off.) If her V6 English turns out to be similar to Teto's on SynthV, I think Miku English songs would finally gain momentum.

See, I agree, which is why they should stop making dedicated English voicebanks and use crosslingual like Synth V. Then the pronunciation will be perfect.

from my understanding, it looks as if their plan is to give miku extra english and chinese data with what they already have for creating those respective previous banks - not to give her specific banks in those languages but to use that existing data to make the xls stronger, like stardust sv. given how they're trying to recreate a very specifically 'miku' sound, it makes sense they would likely want her other languages to still have some parity with the previous english and chinese voicebanks she had by reusing *that* data as well.