CeVIO's Twitter account posted an image of Takahashi... Takahashi CeVIO AI teaser? Maybe?

They only posted him because it's Hinamatsuri (March 3rd) in Japan. 3/3, and they have a trio of characters which makes up another 3 (Sasara and Tsudumi are also mentioned in the tweet).

There isn't really anything more to it - he's singled out because it's also Girl's Day, and Takahashi isn't a girl.

Just a cute little thing to acknowledge the character, but it isn't related or tied to any sort of update confirmation at all - Techno Speech seems more like the kind of company to just straight up announce updates rather than tease them in any way. They announced Sasara and Tsudumi's updates out of nowhere, so I don't see them suddenly teasing one for Takahashi instead of just announcing it outright.

So Kamitsubaki posted a sort of blog post explaining Sekai's design choices amongst other things:

Most interestingly they've confirmed that there are plans for ALL five of the Virtual Witch Phenomenon singers to get AI banks. Seems like we can also expect Cevio AI song voices based on Rim, Harusaruhi and Koko in the future

Rim is going to be interesting. I've always found her oddly similar to KAF but less husky and more brightly colored, down to her three-letter name. Is she going to be RIMU?

I'm interested to hear how they turn out! They all have their own unique vocal quirks that I'm excited to hear in the editor.

RIM also has a really nice tone in English! Even if it's unlikely, I'd absolutely love it if they did something with it.

I’m kinda late to the discussion, but I’ve just heard that SEKAI demo and woo, I really like her tone! Her high notes kinda remind me of Kafu’s (could it be the engine noise?), but I find her low/middle range to be really peculiar and distinctive. Her design is really cool too; it’s simple yet quite effective and recognizable.

I’m also super excited for the possibility of other Kamitsubaki singers; I gave Rim a listen and I feel like I’m already a fan of hers!

Harusaruhi is my personal favorite among the V.W.P., which is why I'm more worried about how they are going to translate her rapping skills to CeVIO. Harusaruhi's style has a lot of rap, which is tonally different from normal singing and way more fast-paced.

My personal hope would be that they release two AI voicebanks separately (or in a bundle) for song and rap. I think it'd be a great opportunity for them to have the first rap-oriented commercial AI bank in their roster, plus CeVIO's tuning interface lends itself incredibly well to tuning rap in my experience (Giga's CH4NGE instantly comes to mind). Alternatively, they could do what they're doing with SEKAI and give her a unique parameter for flipping between styles.

I'm curious for Koko too, she has a very emotional voice and I'd like to see how that translates in CeVIO AI.

They can get a Casio keyboard with the old CeVIO voice tech to rap well enough. Hopefully the AI version, with more refinements, can be even more believable.

Plus, with the bigger budget the CeVIO team has now compared to 5 years ago, they'd better use all that money for research and upgrades.

I imagine a rap VB would be very similar to any other genre-specialised voicebank, where using it within its intended parameters yields fantastic results, but anything outside of it is... less-than-stellar, to say the least.

I’m hoping the Emotion parameter becomes a staple and is continuously built upon, because the ability to pull from different parts of a dataset to fit the tonal and emotional requirements of a song would be quite incredible. Of course, it would require them to record data for 2, 3, or however many emotions they want, but I imagine the payoff would be huge. I imagine it’d help massively with the tonal variety of rap too.

Wow, I didn't know much about this circle of artists, but they all seem talented =o Also, I agree, that English is neat

Sometimes I wish that one day CeVIO might have an in-between mode that would allow more natural bridges between singing and talking, and would be a nice effect for slam, rapping and other specific tonal modes of the voice (kinda like what you get when you use KotonoSync on Voiceroids without any song, or Takahashi/Tsuzumi in their current state)

I do hope that when that happens, a 2-in-1 bank with emotion parameters won't be a pain in the processing unit and take a day to render.

Though I wonder if CeVIO can apply something like selective genre filters, similar to Adobe Photoshop, where many different effects are layered on top and controlled with masking and opacity. But much easier to understand, and without requiring a degree to learn.

I really really hope Harusaruhi's VB holds out for rap! She's done a fair bit of more standard singing work too (she sang the OP for Chikyugai Shonen Shojo, for instance) and it would be a shame to see her bank relegated to a style she's less known for. But either way, I think it's great all of the VWP singers are getting VBs! All of their voices are amazing!

Wow, I just heard all five of them and mygad, they truly all have beautiful singing voices!!

This is not related to the updates or to any of the upcoming banks, but it's still news. Basically, all schools in Japan can use the Speech software for free until 2023.

CeVIO will provide speech synthesis software to schools free of charge in the 4th year of Reiwa

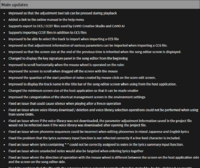

Cevio AI version 8.31.8.0 has been released, adding Natsuki Karin, "Paste only parameters", and minor bug fixes